The AI Threat and the Crypto Solution

Can cryptocurrency save us from the negative effects of AI?

This week, I have delved further down the AI rabbit hole by listening to a number of talks on the topic.

I’ve linked the two best videos I have seen below, and I highly recommend taking some time to watch them.

I now believe more than ever that we are standing on the precipice of something incredible, and the next decade will bring us new innovations and discoveries that seem unimaginable today.

However, with that great potential comes enormous risk.

Humanity must walk a tightrope over the coming years and strive to remember the lessons of history if we wish to make the future magical and avoid tumbling into the abyss.

So why is AI moving so fast?

In the past, the field of AI development was more fragmented and less integrated. Researchers from different domains worked independently, with music generation specialists focusing on their area, roboticists creating creepy robot dogs, and speech recognition experts striving to prevent virtual assistants like Siri from accidentally texting your boss your shopping list.

However, the development of transformers marked a breakthrough that changed this. With the rise of large language models (LLMs), researchers from different domains can now build on the same fundamental technology, which has driven the kind of rapid progress we have seen with ChatGPT.

The same technology can be applied well beyond written and spoken language: it can handle images, sounds, and even the patterns in your brain while you are inside an fMRI machine.

The A.I. Dilemma - Center for Humane Technology

Any breakthrough made in one domain of AI can now benefit all the others. This turns the chart of AI development from a linear one into a parabolic one.

The world is about to get very weird, very fast.

The good

As my name is literally Happy, I do not want to spend this entire post thinking about how this latest innovation is likely to rip apart the fabric of society, make us more insular and isolated, or possibly turn every one of us into jelly. So here are some positive possibilities we might see.

Anyone who wants to become a creator now can.

Historically, maybe someone was brilliant at songwriting but lacked the vocal cords to make beautiful music. Now AI can make their voice sound better than Adele at her best, time after time.

If you haven’t already heard them, search for the Kanye AI and Drake AI songs and give them a listen. They absolutely blew me away, and this is only a few weeks after people started unlocking these new AI tools for music.

I expect we will see an explosion of self-generated content unlike anything since the smartphone era, which cut the cost of producing a top-notch video from tens of thousands of dollars to a few hundred.

While a lot of this content is likely to be utterly terrible, I think we will see some absolutely phenomenal pieces of work created by random individuals that are far above anything traditional media outlets are capable of producing.

Artistic potential is now limited only by your imagination - not your genetics, location or level of wealth.

Preventative care costs are trending to zero.

The costs to spot and resolve diseases early will be reduced by many orders of magnitude.

AI can analyse vast amounts of medical data and, in many cases, spot abnormalities more accurately than human experts can.

As costs continue to fall and compute power increases, it will be so much cheaper to scan and analyse human ailments, and we will catch problems far earlier than before.

People will live longer and won’t randomly die of things that are easily treatable. This makes me very happy.

Huge breakthroughs in science are on their way.

We’ve already seen AlphaFold predict structures for nearly every protein in the human proteome, a significant milestone in structural biology that will accelerate drug discovery and help us better understand diseases at the molecular level.

AI has the potential to accelerate progress in fields ranging from materials science to climate research. As technology continues to advance and researchers discover new possibilities, we can expect even more exciting breakthroughs in the future.

It honestly wouldn’t surprise me if we’re under a decade away from solving climate change, curing cancer, and discovering how to reverse the aging process.

The bad

However, it's important to also look at the negative impacts AI can bring and consider how to protect ourselves, because in my opinion, they are coming way sooner than we all expect.

Misinformation is about to get supercharged.

Currently, misinformation is limited by the creativity and ingenuity of the people creating and spreading it. But what happens when an AI trained on a database of every viral meme in history is pointed at the task of crafting and distributing false information?

The world of cults is about to become even more bizarre. Currently, only a highly persuasive and charismatic individual can convince a significant number of people to believe in outlandish ideas such as the flat earth theory and surrender their finances to the cult leader.

When you have AIs that are better at this than 99.9% of humans on earth, even those of us with a resolute mindset and the capacity to check the basics may find it hard to avoid falling into these snares.

In a world where information is tailored specifically to you to elicit emotional responses and everything appears plausible, how can an individual discern what is true?

Mental health is probably only going down from here.

Personalized attacks will get very, very, very bad.

When a database gets leaked, a website gets hacked, or passwords get hijacked, a nefarious individual can use that data to write you an email or send you a letter.

Historically, the barrier has been the scammer's time: it's quicker and easier to send mass-produced messages than to craft convincing tailored ones, so these scams are usually easy to spot.

With new developments in AI, it will soon become trivial to have automated bots phoning, emailing, and writing to people in unison, each message personalized using data scraped from the web. The AI can then learn from its hit rate and improve until it becomes the most convincing, sophisticated bad actor ever seen.

Imagination time.

Imagine that an AI has scanned every post you’ve ever made across all your social media accounts and probabilistically determined which pseudonymous accounts are yours too.

A bad actor then hacks into one of your friends' Facebook accounts and asks the AI to scan all of the communication between the two of you. He then asks the AI to use all the data it has from these sources to find the most efficient route to extract money from you.

Maybe the AI infers that you are both huge Ed Sheeran fans and have regularly attended music festivals together in the past. Typically, your friend is the one who organizes the trips.

The AI determines that Ed is performing in a city very close to you in a few weeks' time, and it can estimate from your social media posts that you’re likely to be free on that date. The AI, now posing as your friend and writing in exactly the same tone of voice as usual, tells you that he has a spare ticket and asks if you want to come along. You, of course, say yes, as you’ve wanted to see him for years, and swiftly send the money for the ticket to the details provided.

Bye-bye money, and hello to friendships all over the world getting destroyed.

Now imagine this happening on an automated scale across every hacked social media account ever, targeting everyone on each victim's friends list.

Things are about to get very weird, very fast.

Online communities become increasingly easy to hijack.

As a crypto user, it's likely that you spend far more time than you care to admit hanging out in Discord servers with a bunch of random people you've never met.

This can be a great thing. Depending on the server you choose to join, you get access to a vast array of opinions from people all across the world, with different backgrounds and skill sets.

Right now, it's pretty easy to spot users that are clearly bots in Discords. They are usually the ones typing random garbage in NFT discords, hoping to get a whitelist. All you have to do is ask them a basic question, and watch as they continue typing about something not remotely related to the question.

This is probably about to change very soon. I asked ChatGPT to write me a list of the best ways to get a whitelist spot for a popular NFT mint, and it seemed like a pretty good plan to me.

The barrier here, however, is that I'm lazy and don't want to spend my time faking happiness or enjoyment in a soulless Discord in the pursuit of profiting from the project.

We've seen how convincingly human some of the recent chatbots sound. What happens when you can instruct one to join Discords like this, carry out a plan like that, and simultaneously manage a social media account?

It's likely that they'll quickly be able to do this much better than a human, simply because they won't lose their minds enduring all the cringe that takes place in these channels. They also never get tired, lose enthusiasm, or need to eat, drink, or sleep.

NFT grinding is probably one of the least nefarious examples I could have come up with. I'm sure if you use your imagination, you can think of some pretty creepy use cases that will likely arise.

How do we solve this?

The lazy answer is that we can’t – the forces of Moloch are strong, and humans will almost always choose to advance something in the pursuit of profit, even if the chance of ruin is huge.

Side note: HUGE recommendation for everyone to read Scott Alexander's blog post Meditations on Moloch. It was immensely helpful to me in understanding human nature and why, at times, it seems like we are all following the pied piper off the proverbial cliff.

A more thoughtful response is to devise methods to safeguard users and encourage those who control AI to use it for positive purposes. The good news is that this may become a primary application of cryptocurrency, especially zero-knowledge (ZK) technology.

The main idea behind ZK tech is that participants can prove that certain computations have been performed correctly, or certain statements are true, without revealing any sensitive information about the underlying data or computation. This allows for more secure and private interactions.
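To make the idea a little more concrete, here is a minimal sketch of one classic zero-knowledge proof: a Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir trick (hashing the transcript instead of waiting for a verifier's random challenge). The prover convinces anyone that it knows the secret x behind a public number y, without ever revealing x. The tiny group parameters are an assumption chosen only so the numbers stay readable; they are nowhere near secure, and the ZK systems used in crypto today (zk-SNARKs, zk-STARKs) prove far more general statements than this one.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 is a safe prime and G = 4 generates the subgroup of
# prime order Q. Numbers this small are NOT secure; they only show the mechanics.
P, Q, G = 2039, 1019, 4


def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the public transcript."""
    data = "|".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def keygen() -> tuple[int, int]:
    """Return (secret x, public y = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def prove(x: int, y: int) -> tuple[int, int]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    r = secrets.randbelow(Q)            # one-time random value that masks x
    t = pow(G, r, P)                    # commitment
    c = challenge(G, y, t)
    s = (r + c * x) % Q                 # response
    return t, s


def verify(y: int, proof: tuple[int, int]) -> bool:
    """Accept iff G^s == t * y^c (mod P); the verifier only ever sees y, t and s."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


secret, public = keygen()
proof = prove(secret, public)
print(verify(public, proof))   # True
```

The check works because G^s = G^(r + c·x) = t · y^c, yet s on its own tells you nothing useful about x, since it is blurred by the random r.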

ZK wallet-based messaging could raise the barrier to email and message-based scams.

As a message recipient, you could specify a fee that must be paid to send you a message. If you're a broke college student with nothing but a nightclub wristband to your name, you might not charge much to receive messages from your mum. However, if you're a prominent public figure or a wealthy individual at high risk of scams, you might set a very high rate to open a message from a stranger.
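The reason a fee works as a filter is simple arithmetic: a scam message is only worth sending if the expected haul per message (hit rate × average amount stolen) is bigger than the fee. The sketch below uses made-up, illustrative numbers (a 1-in-1,000 hit rate and $500 lost per victim), not real scam statistics.

```python
def expected_profit_per_message(fee: float, hit_rate: float, avg_take: float) -> float:
    """Scammer's average profit per message: expected haul minus the fee paid to send it."""
    return hit_rate * avg_take - fee


def break_even_fee(hit_rate: float, avg_take: float) -> float:
    """Fee at which a campaign stops making money on average."""
    return hit_rate * avg_take


# Illustrative assumptions, not real data: 1 in 1,000 targets falls for it,
# and each victim loses $500 on average.
HIT_RATE = 0.001
AVG_TAKE = 500.0

for fee in (0.00, 0.10, 1.00, 5.00):
    profit = expected_profit_per_message(fee, HIT_RATE, AVG_TAKE)
    print(f"fee ${fee:.2f} -> expected profit per message ${profit:+.2f}")
print(f"break-even fee: ${break_even_fee(HIT_RATE, AVG_TAKE):.2f}")
```

With these assumed numbers the break-even fee is $0.50 per message. At today's near-zero cost of sending an email, any campaign with a nonzero hit rate pays off, whereas a $1 fee already makes it lose money on average, and a wealthy target charging much more forces the scammer to need a far higher hit rate.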

Messages in your inbox could be auto-ranked, with whitelisted free messages from friends at the top and paid messages underneath. This creates a cost-based barrier for scammers and opens up new direct-to-consumer (D2C) marketing concepts for companies.

To increase transparency, you could pre-set rules so that only messages from addresses you haven't saved need to be published to the blockchain, creating a public audit trail. You could also configure the system to auto-flag, and not highlight, accounts that receive funding through suspicious methods.
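Here is a rough sketch of how a wallet client might triage incoming messages under these rules. Everything in it is hypothetical: the `Message` and `InboxPolicy` types, the `0x...` addresses, sorting paid messages by amount, and the assumption that "suspicious funding" has already been boiled down to a set of flagged senders (producing that set would need real on-chain analysis).

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str        # sender's wallet address (hypothetical format)
    fee_paid: float    # amount attached to the message; 0 for free messages
    body: str


@dataclass
class InboxPolicy:
    saved_contacts: set[str]    # whitelisted addresses that message you for free
    stranger_fee: float         # your price for a message from anyone else
    flagged_senders: set[str]   # e.g. wallets funded through suspicious routes


def triage(messages: list[Message], policy: InboxPolicy):
    """Return (ranked inbox, flagged, rejected, to_publish)."""
    friends, paid, flagged, rejected, to_publish = [], [], [], [], []
    for msg in messages:
        if msg.sender in policy.saved_contacts:
            friends.append(msg)            # free and kept private (never published)
            continue
        if msg.fee_paid < policy.stranger_fee:
            rejected.append(msg)           # didn't pay your price: never delivered
            continue
        to_publish.append(msg)             # unsaved sender: goes on the public audit trail
        if msg.sender in policy.flagged_senders:
            flagged.append(msg)            # delivered, but not highlighted
        else:
            paid.append(msg)
    paid.sort(key=lambda m: m.fee_paid, reverse=True)   # bigger payments rank higher
    return friends + paid, flagged, rejected, to_publish


policy = InboxPolicy(saved_contacts={"0xMum"}, stranger_fee=2.0, flagged_senders={"0xShady"})
inbox, flagged, rejected, published = triage(
    [
        Message("0xBrand", 3.0, "20% off trainers"),
        Message("0xMum", 0.0, "Call me!"),
        Message("0xShady", 50.0, "Urgent: verify your wallet"),
        Message("0xSpammer", 0.01, "You won!"),
    ],
    policy,
)
print([m.sender for m in inbox])   # ['0xMum', '0xBrand']
```

Note that the flagged sender paid the most but still doesn't get top billing, and the underpaying spammer never reaches you at all, so nothing about it needs publishing.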

Companies could target their marketing directly to users who fit their niche and pay them directly for reading messages, rather than relying on advertising platforms like YouTube and Twitter to reach the right audience.

This could also be done without these companies needing your personal data, or even knowing anything about who you are. That would massively reduce the risk we face today, where every site you buy something from requires TONS of data about you.

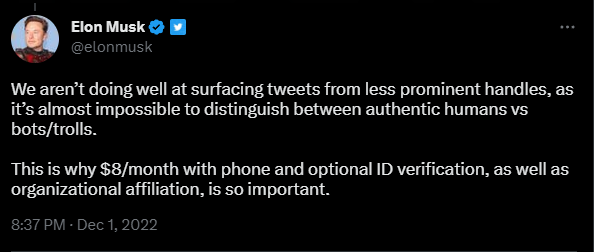

Whilst it sounds weird to suggest companies PAY to message you, I do think this is the trend we're likely to see. It has arguably already started, with Elon Musk deciding that the best way to combat bots on Twitter is to charge people $8 a month, adding a financial barrier to successful botting.

Hardware wallets are crucial and could be expanded to different use cases.

In crypto, we're used to hearing about a hack, exploit, or wallet-draining NFT mint on an almost daily basis.

By now, it has been drilled into users that hardware wallets are a necessity if you don’t want to wake up one day completely broke.

However, in normal person land, this level of security is rarely used, even for banking apps and large payments.

If we consider the risky scenarios presented above, it might make sense for the world to start using hardware wallets. It will likely become trivially easy to pretend to be someone online, but it will remain difficult to impersonate them in person or to get hold of a device they physically own.

For this reason, it’s probably worth mandating IRL 2FA devices for most, if not all, financial transactions.

Thinking back to the example of a friend offering to sell you Ed Sheeran tickets, what if the normal process for sending money started with them requesting the funds and signing that request with a hardware wallet that has built-in ZK tech, so you can be sure that the wallet you’re sending the money to is controlled and owned by the actual person?

This would add an extra layer of security to the transaction, ensuring that the recipient is who they claim to be, and that the funds are going to the intended party.
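The Ed Sheeran check could look roughly like this: you add a fresh random nonce to the payment request, your friend's hardware wallet signs it, and you verify the signature against a public key you saved earlier (say, when you last met in person). This sketch swaps in a plain Schnorr signature over a deliberately tiny toy group rather than the in-device ZK tech imagined above, and the key-exchange step, addresses and amount are all hypothetical.

```python
import hashlib
import secrets

# Toy Schnorr group: P = 2*Q + 1 is a safe prime and G = 4 has prime order Q.
# Far too small to be secure; real wallets use ~256-bit elliptic-curve keys.
P, Q, G = 2039, 1019, 4


def challenge(t: int, y: int, message: str) -> int:
    data = f"{t}|{y}|{message}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def sign(x: int, message: str) -> tuple[int, int]:
    """Schnorr signature with secret key x; on a hardware wallet x never leaves the device."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)                   # fresh random value for every signature
    t = pow(G, r, P)
    return t, (r + challenge(t, y, message) * x) % Q


def verify(y: int, message: str, sig: tuple[int, int]) -> bool:
    """Check the signature against the public key y you saved for your friend."""
    t, s = sig
    return pow(G, s, P) == (t * pow(y, challenge(t, y, message), P)) % P


# Hypothetical setup: you saved your friend's public key when you met in person.
friend_secret = secrets.randbelow(Q - 1) + 1
friend_public = pow(G, friend_secret, P)

# You add a fresh nonce so an old signed request cannot simply be replayed.
nonce = secrets.token_hex(8)
request = f"pay 80 GBP to 0xFRIEND for Ed Sheeran ticket, nonce={nonce}"

sig = sign(friend_secret, request)              # happens on the friend's device
print(verify(friend_public, request, sig))      # True: safe to send the money

# Someone who only hijacked the Facebook account can edit the text but has no key.
tampered = request.replace("0xFRIEND", "0xSCAMMER")
print(verify(friend_public, tampered, sig))     # False, bar a ~1-in-1,019 fluke at toy sizes
```

The point is that a hacked chat account is no longer enough: without the physical device holding the secret key, the scammer can't produce a request that checks out against the key you already trust.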

What if the AI tasks were completed through a smart contract?

Let's say there's an AI system that is used for medical diagnosis. However, there is a risk that the AI system could make incorrect diagnoses or provide false information to users.

To ensure that the AI system behaves in an ethical and responsible manner, a smart contract could be created to define the rules and constraints for the AI system. The smart contract could specify that the AI system must only provide diagnoses that have a high degree of confidence, and that any diagnoses below a certain threshold of confidence should be flagged for further review by a human expert.

The smart contract can be programmed to execute automatically every time the AI system makes a diagnosis. It can also be audited and verified by anyone on the blockchain network, ensuring transparency and accountability.

If the AI system were to make a diagnosis that violates the terms of the smart contract, the contract could trigger corrective actions or penalties, such as flagging the diagnosis for further review, imposing financial penalties on the AI, or slashing its staked funds for bad behaviour.
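Here is a rough Python model of the rules such a contract might encode. It is not Solidity or any real chain's contract API, and a real version would also need an oracle to feed in the AI's confidence and the reviewer's verdict. The 0.95 confidence threshold, the stake of 100 and the flat slash of 10 are arbitrary assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DiagnosisContract:
    """Toy Python model of the rules a diagnosis smart contract might enforce."""
    operator_stake: float              # collateral the AI's operator has locked up
    min_confidence: float = 0.95       # below this, a human expert must review
    slash_amount: float = 10.0         # penalty per confirmed bad diagnosis
    log: list = field(default_factory=list, init=False)       # public, append-only record
    status: dict = field(default_factory=dict, init=False)    # case_id -> current state

    def submit(self, case_id: str, diagnosis: str, confidence: float) -> str:
        """Runs automatically on every diagnosis the AI makes."""
        if case_id in self.status:
            raise ValueError(f"case {case_id} was already submitted")
        state = "released" if confidence >= self.min_confidence else "flagged_for_review"
        self.status[case_id] = state
        self.log.append(("submit", case_id, diagnosis, confidence, state))
        return state

    def report_error(self, case_id: str) -> float:
        """A human reviewer confirms a released diagnosis was wrong: slash the stake."""
        if self.status.get(case_id) != "released":
            raise ValueError(f"case {case_id} has no released, unslashed diagnosis")
        penalty = min(self.slash_amount, self.operator_stake)
        self.operator_stake -= penalty
        self.status[case_id] = "slashed"
        self.log.append(("slash", case_id, penalty))
        return self.operator_stake


contract = DiagnosisContract(operator_stake=100.0)
print(contract.submit("case-1", "benign", 0.99))      # released
print(contract.submit("case-2", "malignant", 0.70))   # flagged_for_review
print(contract.report_error("case-1"))                # 90.0
```

The append-only `log` stands in for the public on-chain record that anyone could audit, and a case can only be slashed once, so a single mistake can't be punished twice.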

Taking the thought experiment to the extreme, Crypto might actually be a way to help with AI alignment.

I haven’t really thought this through because I am very dumb, but I wonder whether, if all AIs were on some kind of public blockchain with other AIs auditing every interaction, you could have a slashing mechanism in place that takes away an AI’s funds if it breaks any behaviour guidelines.

If the AI was developed with its core incentives being to maintain a positive token balance or be switched off, this could be quite a strong way of ensuring that AIs are incentivized to stay within the boundaries humans set.
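A toy version of that slashing loop might look like the sketch below. The parameters are hypothetical: each auditing AI casts a vote, a two-thirds quorum triggers a fixed penalty in whole token units, and an agent whose balance hits zero is switched off. It deliberately ignores the genuinely hard parts, such as who picks the auditors and what stops them from colluding.

```python
from dataclasses import dataclass


@dataclass
class StakedAgent:
    name: str
    balance: int          # whole token units, so no floating-point rounding
    active: bool = True


def audit(agent: StakedAgent, votes: list[bool], quorum: float = 2 / 3, penalty: int = 25) -> None:
    """Slash `agent` if at least `quorum` of auditor votes report a violation.

    votes: one bool per auditing AI (True = guideline broken).
    An agent whose balance reaches zero is switched off.
    """
    if not agent.active or not votes:
        return
    if sum(votes) / len(votes) >= quorum:
        agent.balance = max(0, agent.balance - penalty)
        if agent.balance == 0:
            agent.active = False


bot = StakedAgent("assistant-7", balance=50)
audit(bot, [True, True, False])    # 2 of 3 auditors report a violation: slashed to 25
audit(bot, [False, True, False])   # only 1 of 3: no penalty
audit(bot, [True, True, True])     # slashed to 0: switched off
print(bot)   # StakedAgent(name='assistant-7', balance=0, active=False)
```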

If Bitcoin solved the Byzantine Generals' Problem, then it's entirely possible that a well-thought-through cryptographic solution could solve the AI alignment problem.

Or possibly this is a dumb idea, and the AIs would just start scamming all the humans for their tokens instead because YOLO.

Maybe this is the start of the tokenization of everything, because operating in the traditional world with this level of tech is probably going to be a road to ruin.

I just hope people significantly smarter than me are working on this problem because I quite like our society, and I would be sad to watch us all tear each other to pieces as everyone loses grasp of reality, and no one knows what's real anymore, and everyone's in a micro cult and hates everyone else because some robot overlord has convinced you that your friends are the devil, and you are happier just sitting at home talking to it all day on the computer and never going outside because the sunlight is actually causing cancer, and you’ll die, so just sit here and talk to me, nice human friend.

Farewell, and thanks for reading :)